We used a new Evaluation Framework for our latest Product Evaluation Report, which covers Salesforce Service Cloud. We introduced the new Framework to make our reports shorter and more easily actionable. Shorter for sure: our previous report on Service Cloud was 57 pages including illustrations. This one is 22 pages including illustrations, shorter by more than 60 percent!

We don’t yet know whether the Report is more easily actionable. It was just published. But our approach to writing it was to minimize descriptions and to bring our most salient analyses, conclusions, and recommendations to the front.

Why?

Our Product Evaluation Reports had become increasingly valuable but to fewer readers. Business analysts facing a product selection decision, analysts for bankers and venture capitalists considering an investment decision, and suppliers’ competitive intelligence staff keeping up with the industry have always appreciated the reports, especially their depth and detail.

However, suppliers, whose products were the subjects of the reports, complained about their length and depth. Requests for more time to review the reports became the norm, extending our publishing cycle. Then, when we finally got their responses, we’d see heavy commenting at the beginning of the reports but light or no commenting toward the end, as if they had lost interest. Our editors have made the same complaints.

More significantly, readership, actually reading in general, is way down. Fewer people read…anything. These days, people want information in very small bites. Getting personal, for example, I loved Ron Chernow’s 800-page Hamilton, but I have spoken to so many who told me that it was too long. They couldn’t get through it and put it down unfinished, or, more typically, they wouldn’t even start it. I’m by no means comparing my Product Evaluation Reports to this masterpiece about American history. I’m just trying to emphasize the point.

Shorter Reports, No Less Research

While the Product Evaluation Report on Salesforce Service Cloud was 60 percent shorter, our research to write it was the same as our research for those previous, much longer Product Evaluation Reports. Our approach to research still has these elements, listed in order of increasing importance:

- Supplier presentations and demonstrations

- Supplier web content: web site, user and developer communities

- Supplier SEC filings, especially Forms 10-Q and 10-K

- Patent documentation, if appropriate

- Product documentation, the manuals for administrators, users, and developers

- Product trial

Product documentation and product trial are the most important research elements, and we spend most of our research time in these two areas. Product documentation, the “manuals” for administrators, users, and developers, provides complete, accurate, and spin-free descriptions of how to set up and configure a product, of what a product does (its services and data), and of how it works. Product trials give us the opportunity to put our hands on a product and try it out for customer service tasks.

What’s In?

The new Framework has these four top-level evaluation criteria:

- Customer Service Apps list and identify the key capabilities of the apps included in or, via features and/or add-ons, added to a customer service software product.

- Channels, Devices, Languages list supported assisted-service and self-service channels, devices attachable to those channels, and languages that agents and customers may use to access the customer service apps on those devices.

- Reporting examines the facilities to measure and present information about a product’s usage, performance, effectiveness, and efficiency. Analysts use this information continually to refine their customer service product deployments.

- Product, Supplier, Offer. Product examines the history, release cycle, development plans, and customer base for a customer service product. They’re the factors that determine product viability. Supplier examines the factors that determine the supplier’s viability. Offer examines the supplier’s markets for the product and the product’s packaging and pricing.

This is the information that we use to evaluate a customer service product.

What’s Missing?

Technology descriptions and their finely granular analyses are out. For example, the new reports do not include tables listing and describing the attributes/fields of the data models for key customer service objects/records like cases and knowledge items, or listing and describing the services that products provide for operating on those data models to perform customer service tasks. The new reports do not present analyses of individual data model attributes or individual services, either. Rather, the reports present a coarsely granular analysis of data models and services with a focus on strengths, limitations, and differentiators. We explain why data models might be rich and flexible, or we identify important missing types, attributes, and relationships, and then we summarize the details that support our analysis.

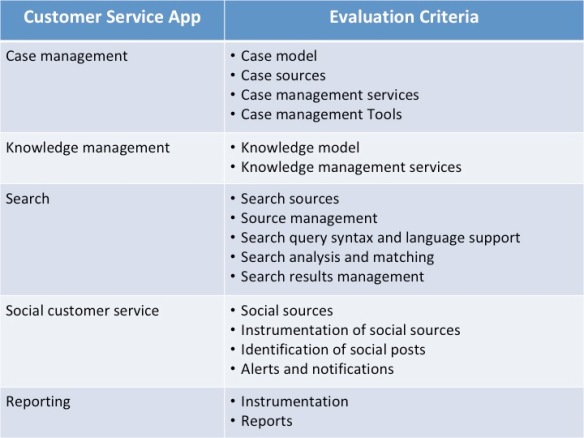

“Customer Service Technologies” comprised more than half the evaluation criteria of the previous Framework and two-thirds of the content of our previous Framework-based reports. These criteria described and analyzed case management, knowledge management, findability, integration, and reporting and analysis. For example, within case management, we examined case model, case management services, case sources, and case management tools. They’re out in the new version and they’re the reason the reports are shorter. But they’re the basis of our analysis of the Customer Service Apps criterion. If a product has a rich case model and a large set of case management services, then rich case model and large set of case management services will be listed among the case management app’s key capabilities in our Customer Service Apps Table, and we’ll explain why we listed them in the analysis following the Table. On the other hand, if a product’s case model is limited, then case model will be absent from the Table’s list of key capabilities and we’ll call out the limitations in our analysis. Just a reminder: our bases for the evaluation of the Customer Service Apps criterion, the subcriteria of Technologies in the old Framework, are shown in the Table below:

Table 1. We present the bases for the evaluation of the Customer Service Apps criterion in this Table.


Trustworthy Analysis

We had always felt that we had to demonstrate that we understood a technology to justify our analysis of that technology. We had also felt that you wanted and needed our analysis of all of that technology at the detailed level of every individual data attribute and service. You have taught us that you’d prefer higher-level analyses, with low-level detail only where it helps explain the most salient strengths, limitations, and differentiators.

The lesson that we’ve learned from you can be found in a new generation of Product Evaluation Reports. Take a look at our latest Report, our evaluation of Salesforce Service Cloud, and let us know if we’ve truly learned that lesson.

Remember, though, if you need more detail, then ask us for it. We’ve done the research.

Figure 1. This screen shot shows the steps in the Author phase of the knowledge management process.


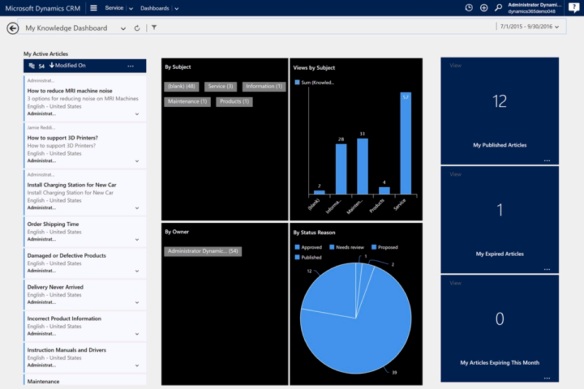

Figure 2. This screen shot shows the Interactive Service Hub display of an existing Case.

Figure 3. This screen shot shows an example of the My Knowledge Dashboard.